Experimenting with and evaluating model or configuration changes before deploying them to production is critical to launching new models or model changes with confidence. You can catch errors, identify scaling limits, and A/B test changes before they impact production systems and customer experience.

In an ideal world, decision model testing is a smooth process: test data sets are just ready to go (no need to spend hours gathering and normalizing data), model code is easy to deploy and run remotely, standard tests are easily run and repeatable, and results are provided in a consistent format that can be shared with collaborators directly. This is the dream and the dream is possible.

However, not all decision science teams can support a holistic testing framework that runs model tests in the same environment as their production code. This may be due to existing infrastructure investment or data handling requirements. But that doesn’t mean effective model testing workflows are a nonstarter. It means you can achieve the same goal with a different approach: the test bench.

In this blog post, we’ll explore decision model test bench approaches and why you might choose one over the other in the context of Nextmv’s platform. This post will focus on model testing in relation to existing production runs, but it is also possible (and recommended!) to conduct model tests prior to going live in production.

Decision model testing: A primer

Without the infrastructure and tooling to easily run tests and compare results, most decision model experimentation ends up being pretty ad hoc. It requires assembling (often fragmented) data, writing custom analysis code in a notebook on your local machine, setting up everything to run so you can perform comparisons, and then translating results (often screenshots pasted into slides) to share with stakeholders. It can be time-consuming, hacky, and hard to reproduce, and as a result it’s not always practical to do under pressure.

To achieve that ideal state I mentioned in the introduction, there are several pieces you need:

Model management: In order to understand the results of a test, you need to first be clear as to what version of the model was used and how the model was configured to run (e.g., solver run duration or which constraints have been enabled or disabled). When multiple individuals are collaborating on the same model code, this becomes even more important.

Run logs and replay: A log of the inputs and outputs for historical runs, including metadata about each run (e.g., which version was run, what configuration was enabled, who made the run, when it was made, etc.), lets you investigate failed runs and replay and iterate on successful ones. This is the basis for an experiment.

Input (or design) set management: When running an experiment, a representative set of data is desired and often the same sets of input data are used across experiments to make it easier to compare results. This input set is typically called a “design set”.

Historical and online tests: Different stages of development require different testing types. For example, ad hoc (or batch) tests help to quickly compare two different versions of a model and iterate during model development, whereas acceptance tests add a layer of abstraction based on pre-defined KPIs that gives technical and non-technical stakeholders additional confidence prior to launching a model change. Further online testing such as shadow or switchback testing can provide out-of-sample testing for models prior to or as part of a rollout.

Standard, shareable results: When proposing model changes to stakeholders, having standardized, shareable tables, charts, and other visualizations makes it easier to iterate and communicate these changes.
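To make the run log and replay piece a bit more concrete, here is a minimal Python sketch. It is an illustration only, not Nextmv's data model: the `RunRecord` fields, the `replay` helper, and the `toy_model` stand-in are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, Optional

Model = Callable[[Dict[str, Any], Dict[str, Any]], Dict[str, Any]]


@dataclass
class RunRecord:
    """One logged run: input, output, and the metadata needed to interpret it."""
    run_id: str
    model_version: str          # which version of the model produced the output
    config: Dict[str, Any]      # how the model was configured for this run
    input_data: Dict[str, Any]
    output_data: Dict[str, Any]
    created_by: str
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def replay(
    record: RunRecord,
    model: Model,
    config_overrides: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Re-run a logged input, optionally with a changed configuration."""
    config = {**record.config, **(config_overrides or {})}
    return model(record.input_data, config)


def toy_model(input_data: Dict[str, Any], config: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for a decision model: pick the cheapest stops up to a limit."""
    stops = sorted(input_data["stops"], key=lambda s: s["cost"])
    chosen = stops[: config["max_stops"]]
    return {
        "stops": [s["id"] for s in chosen],
        "value": sum(s["cost"] for s in chosen),
    }


record = RunRecord(
    run_id="run-001",
    model_version="v1.2.0",
    config={"max_stops": 2},
    input_data={"stops": [
        {"id": "a", "cost": 5}, {"id": "b", "cost": 3}, {"id": "c", "cost": 8},
    ]},
    output_data={"stops": ["b", "a"], "value": 8},
    created_by="analyst@example.com",
)

replayed = replay(record, toy_model)                   # same config as the log
what_if = replay(record, toy_model, {"max_stops": 3})  # a "what if" variant
```

Replaying with the logged configuration should reproduce the logged output, while the override gives you a quick comparison run on exactly the same input.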

Some decision science teams choose to build, integrate, and maintain all of these pieces (plus the infrastructure to run it all on). Others seek out a DecisionOps platform like Nextmv instead, which includes all of these components in the same environment. This means testing can be done in the same execution environment as production runs, providing more confidence when launching model changes. Let’s look at this holistic approach and how it works.

End-to-end testing (and deployment) with Nextmv

In this scenario, Nextmv is used across the entire model lifecycle, from development to deployment. The main benefit to testing with this scenario is that you are testing your models in the same execution environment that you are running them in production.

This is often the simplest and most straightforward path for testing. We’ve seen this in the last-mile delivery space, where one team manages input sets for each geographic region, with data representative of a range of order volume scenarios, to use when testing model changes. They’ve also automated the running of acceptance tests from their Git workflows to make sure experimentation is built into their development process.
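As a rough idea of what a KPI-based acceptance test checks, here is a small Python sketch. It is not Nextmv's acceptance test implementation: the `KpiRule` type, the KPI names, and the tolerance logic are assumptions made for illustration, and the comparison assumes positive KPI values.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class KpiRule:
    name: str         # KPI key expected in each run's statistics
    direction: str    # "lower" or "higher" is better
    tolerance: float  # allowed relative regression, e.g. 0.02 for 2%


def acceptance_test(
    baseline: List[Dict[str, float]],
    candidate: List[Dict[str, float]],
    rules: List[KpiRule],
) -> Dict[str, bool]:
    """Compare mean KPIs of a candidate model against a baseline model.

    Both lists hold one statistics dict per input in the same input set.
    """
    results = {}
    for rule in rules:
        base = mean(run[rule.name] for run in baseline)
        cand = mean(run[rule.name] for run in candidate)
        if rule.direction == "lower":
            results[rule.name] = cand <= base * (1 + rule.tolerance)
        else:
            results[rule.name] = cand >= base * (1 - rule.tolerance)
    return results


baseline_runs = [
    {"total_distance_km": 100.0, "orders_assigned": 50},
    {"total_distance_km": 120.0, "orders_assigned": 60},
]
candidate_runs = [
    {"total_distance_km": 98.0, "orders_assigned": 50},
    {"total_distance_km": 115.0, "orders_assigned": 57},
]
rules = [
    KpiRule("total_distance_km", direction="lower", tolerance=0.0),
    KpiRule("orders_assigned", direction="higher", tolerance=0.02),
]

results = acceptance_test(baseline_runs, candidate_runs, rules)
accepted = all(results.values())
```

In this example the candidate improves distance but assigns fewer orders than the 2% tolerance allows, so the overall test fails. A check like this is easy to wire into a Git workflow because it reduces to a single pass/fail value.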

But as I mentioned before: not everyone can run their tests this way. Enter the test bench.

Decision model test bench: What is it and how to use Nextmv as one

The term “test bench” may conjure up images of a physical workbench, with tools for measuring and manipulating a device to manually verify its correctness. In decision modeling, a test bench is an environment that mimics production and is used for testing model changes prior to releasing them to production. It’s sometimes referred to as a ‘test harness’ in software, particularly when it’s not possible or feasible to test on the full production infrastructure and imitation infrastructure must be used (e.g., due to licensing costs, security concerns, or simply to speed things up).

Let’s look at two ways to use Nextmv as a test bench.

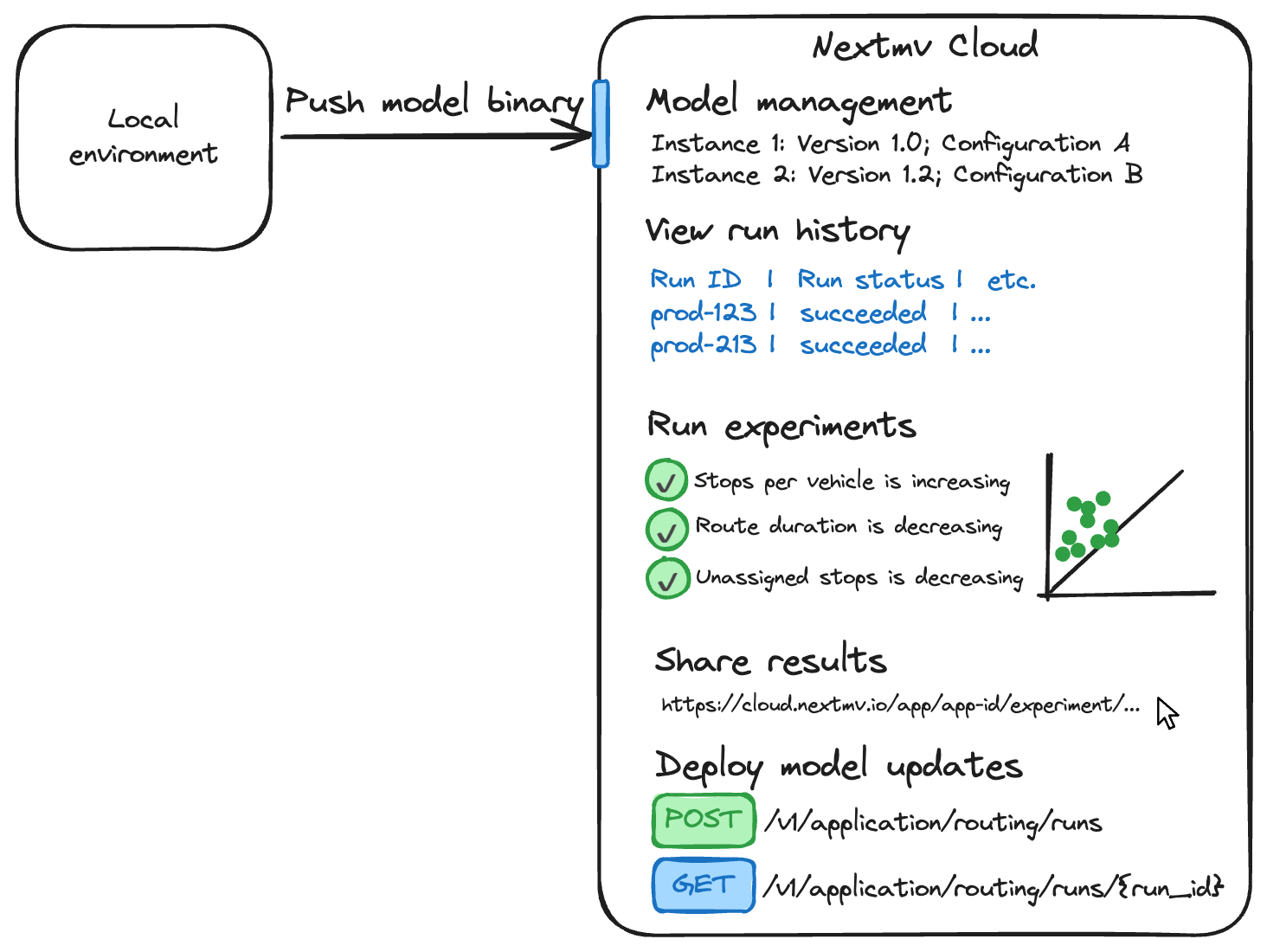

Nextmv for testing, model management, and run logging, while you handle production runs/deployment

Let’s say you’re a retail company solving a warehouse management problem. In the past, you’ve done lots of ad hoc scenario testing. This involved diagnosing a failure, knowing where to find the data, downloading a bunch of files, matching them up with production, and setting up a clean development space that represents what happened in production.

Your company already has dedicated infrastructure in place for production workloads, which you don’t want to change. But testing is important to you, and the current ad hoc process is cumbersome and inefficient.

In this scenario, Nextmv can be used to streamline the testing process by handling everything except the production deployment. The input and output from production runs are passed back to Nextmv via API calls, making it simpler to spin up new experiments from production data (no need to hunt down data from different systems every time).
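The hand-off from your production system could look something like the Python sketch below. Note the assumptions: the URL, payload shape, and field names are hypothetical placeholders, not Nextmv's documented API, so check the actual API reference before wiring anything up. The sketch only builds the request and does not send it.

```python
import json
import urllib.request
from typing import Any, Dict

# Hypothetical endpoint for illustration only; not a documented Nextmv URL.
TRACKING_URL = "https://testbench.example.com/v1/apps/{app_id}/runs"


def build_run_request(
    app_id: str,
    api_key: str,
    run_input: Dict[str, Any],
    run_output: Dict[str, Any],
    metadata: Dict[str, Any],
) -> urllib.request.Request:
    """Package one production run (input, output, metadata) as a POST request."""
    payload = {"input": run_input, "output": run_output, "metadata": metadata}
    return urllib.request.Request(
        url=TRACKING_URL.format(app_id=app_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_run_request(
    app_id="warehouse-slotting",
    api_key="YOUR_API_KEY",
    run_input={"orders": [{"id": "o1", "sku": "A-100", "qty": 3}]},
    run_output={"assignments": [{"order": "o1", "bin": "B-07"}]},
    metadata={"model_version": "v3", "status": "succeeded"},
)

# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Calling something like this at the end of every production run is what keeps the run history current without anyone having to gather files by hand.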

With this approach, you still benefit from the pre-built experimentation, run history, model management, and collaboration tools while testing your models in an environment that is very close to your production environment. Now, production runs are automatically posted to Nextmv and available for immediate testing, run history allows team members to play back order data and set up “what if” experiments faster, and more people can onboard and access the test suite more efficiently than before.

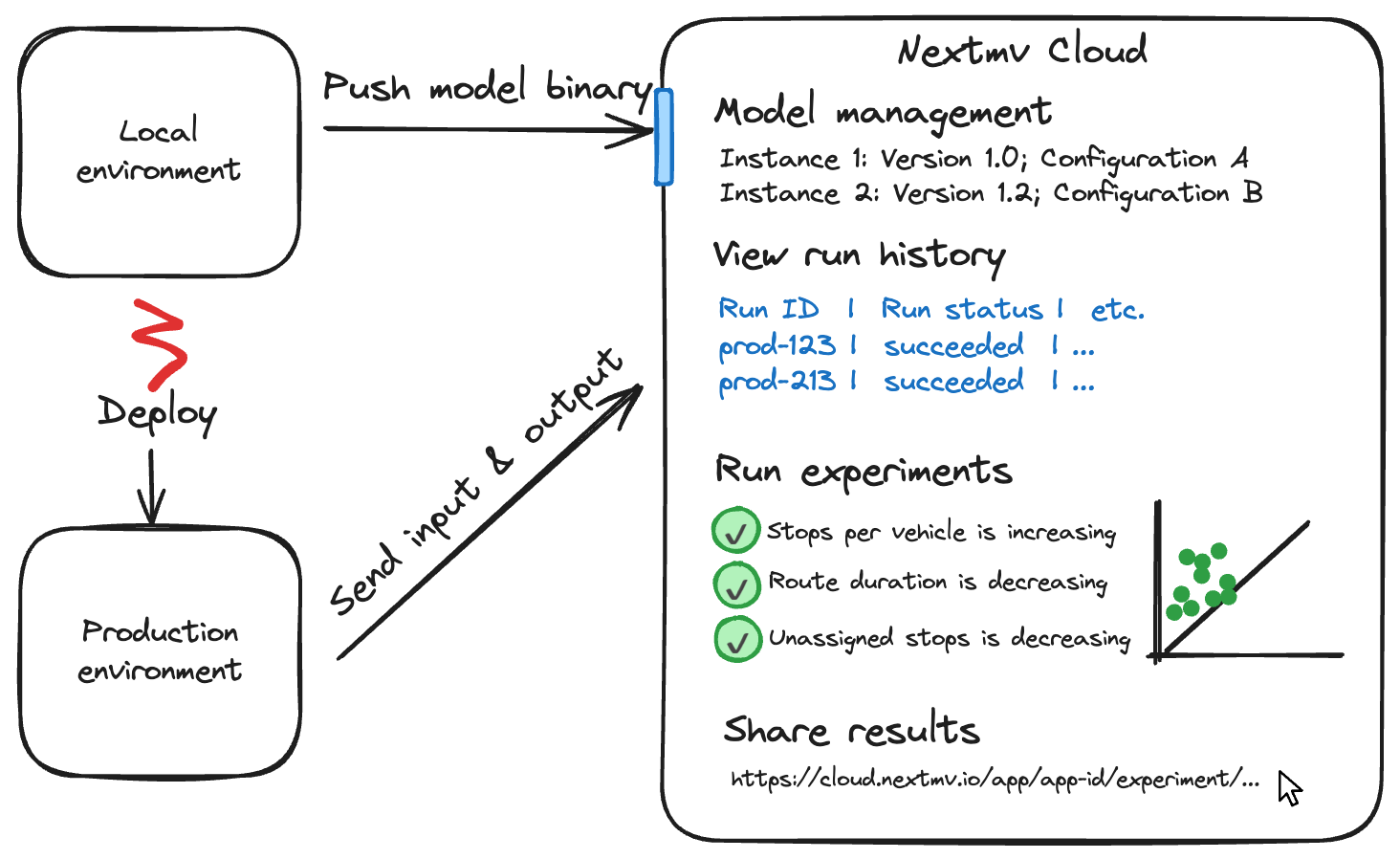

Nextmv for testing only, for when you have data handling requirements

Now, let’s say you’re at a large retailer with strict security requirements around production runs and data handling. You can neither run models outside your infrastructure nor pass the input and output from production runs to Nextmv. Can you still have a test bench?

In this scenario, Nextmv can be used for only running experiments and sharing results — only the output statistics and metadata from production runs are passed back to Nextmv via API calls and appended to experiments.
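One way to picture this is a small reduction step that runs inside your own infrastructure before anything is shared. The Python sketch below is illustrative only: the record layout and the statistic names (`objective_value`, `solve_seconds`, `assignments`) are hypothetical, and which fields count as safe to share is a decision for your own data handling policy.

```python
from typing import Any, Dict


def summarize_run(run: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a production run to statistics and metadata only.

    The raw input and the detailed output never leave this function's caller.
    """
    output = run["output"]
    return {
        "metadata": dict(run["metadata"]),
        "statistics": {
            "objective_value": output["objective_value"],
            "solve_seconds": output["solve_seconds"],
            "assigned_orders": len(output["assignments"]),
        },
    }


production_run = {
    "input": {"orders": [{"id": "o1"}, {"id": "o2"}]},  # stays on your side
    "output": {
        "objective_value": 1234.5,
        "solve_seconds": 8.2,
        "assignments": [
            {"order": "o1", "bin": "B-07"},
            {"order": "o2", "bin": "B-11"},
        ],
    },
    "metadata": {"run_id": "prod-42", "model_version": "v3"},
}

summary = summarize_run(production_run)
```

Only `summary` would then be sent via API and attached to an experiment, so results stay comparable across runs without exposing the underlying data.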

You’re still testing models in an environment that is very close to your production environment, and you still get all of the out-of-the-box experimentation tools (except for switchback testing, since those runs are external to Nextmv) and standardized results reporting, all while complying with (stricter) organizational requirements for managing data on your own infrastructure.

Where to go from here

Decision model testing provides important business value to many decision science and operations research teams. It guards organizations against making expensive mistakes, provides a clear and repeatable path to production, and gives stakeholders more confidence in model outcomes. But efficiently scaling these testing systems is a known pain point for many teams, and more often than not they’d rather invest in building more decision models, not more decision tools.

That’s why we’ve built Nextmv, and continue to evolve it to accommodate unique architecture setups. If you’d like to use Nextmv for testing and production runs, you can sign up for a free Nextmv account and follow along with our quick start guide. If you’re interested in using Nextmv as a test bench alongside an existing system, please contact us to discuss your requirements.